AWS ECS Guide in Python

What is it (without AWS lingo)?

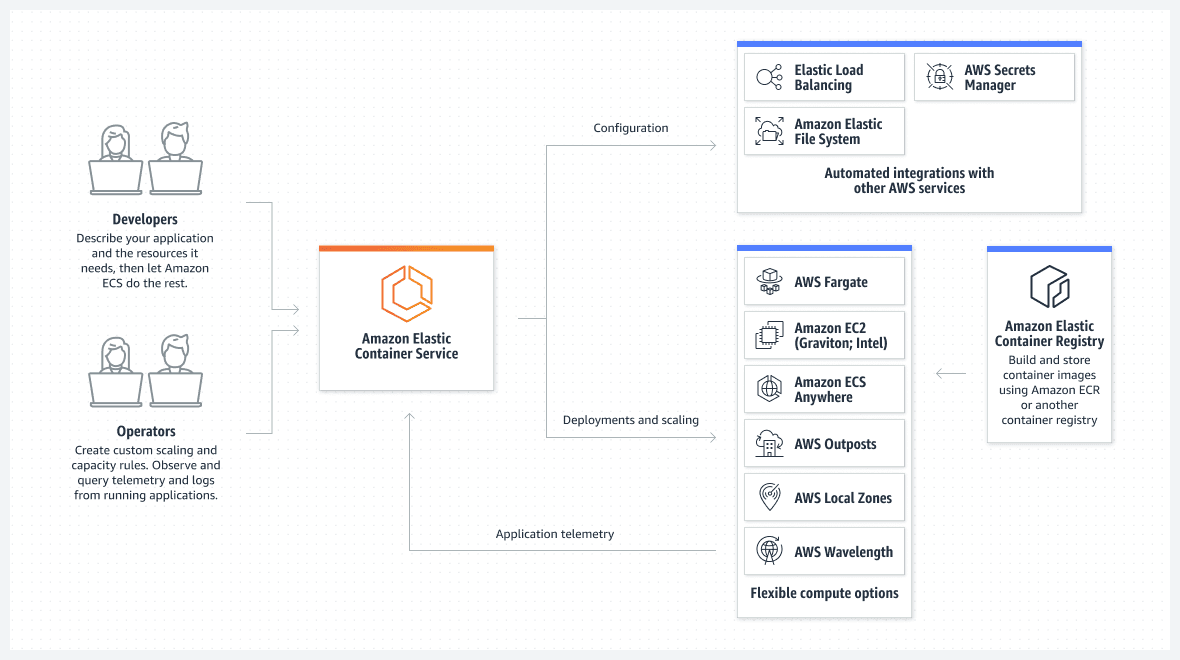

AWS ECS is a tool provided by Amazon that helps you run and manage applications made up of small, self-contained pieces called containers. Think of containers as lightweight virtual machines that run code on command and carry no extra bloat. ECS helps you organize and operate these containers without managing the underlying systems they run on, which you would have to do with a virtual machine. It also works with other Amazon tools, allowing for things like balancing the workload among containers and storing container images. Essentially, with ECS, developers can focus on building their applications while Amazon handles the nitty-gritty of running them efficiently.

What is it (with all the lingo)?

AWS ECS (Amazon Elastic Container Service) is a fully managed container orchestration service provided by Amazon Web Services (AWS). It allows users to deploy, manage, and scale containerized applications using Docker, either on Amazon EC2 instances or on AWS Fargate. ECS integrates with other AWS services like Elastic Load Balancing, Amazon ECR, and AWS Fargate, enabling automatic load balancing, image storage, and serverless compute for containers. With ECS, developers can focus on building applications without worrying about the underlying infrastructure or container management details.

Why not Kubernetes?

Simply put, ECS is simpler, and if you don't know whether you need Kubernetes, then ECS is probably plenty for you. AWS ECS and Kubernetes are both orchestration systems designed to manage containers, but they come with distinct characteristics and use cases. Kubernetes, often referred to as K8s, is an open-source platform originally developed by Google, and it has become a de facto standard for container orchestration worldwide. Its open nature makes it platform agnostic, meaning it can run on various cloud providers or on-premises. Kubernetes offers a rich set of features, a vibrant community, and wide-ranging adaptability.

On the other hand, AWS ECS is a proprietary service deeply integrated with the AWS ecosystem. It simplifies the process of deploying containers on AWS and offers a more straightforward approach for those already invested in the AWS environment. While Kubernetes provides flexibility and a broader feature set, ECS offers ease of use and tight integration with other AWS services. The choice between them often comes down to the specific requirements of a project and the environment in which it operates.

Define parameters for the task

Start by setting the parameters you want the container to run with.

repo_name = 'example-container'  # the repository name as it appears in ECR
task_name = repo_name + '-task'  # could name it the same, I'm just adding a suffix
cluster_choice = 'my-cluster-example'  # the cluster that you have in ECS. If using tasks, it is probably okay to start with just 1 cluster

# valid CPU/memory combos are listed at
# https://docs.aws.amazon.com/AmazonECS/latest/developerguide/task-cpu-memory-error.html
cpu_amount = 1  # vCPUs (cpu cores)
memory_amount = 4  # GB of memory

# convert to the format ECS expects: strings holding CPU units (1024 per vCPU) and MiB of memory
cpu_amount_value = str(int(cpu_amount * 1024))
memory_amount_value = str(int(memory_amount * 1024))
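If you pick a CPU/memory pair that Fargate does not support, registration fails with the error described in the link above. Here is a minimal sketch of checking the pair locally first. The table reflects my reading of the AWS docs for sizes up to 4 vCPU (larger sizes exist but are left out here), and the names `FARGATE_MEMORY_BY_CPU`, `is_valid_fargate_combo`, and `to_ecs_values` are made up for this example, so double-check against the current docs before relying on it.

```python
from typing import Tuple

GIB = 1024  # MiB per GiB

# Assumed table of Fargate CPU units -> allowed memory values in MiB.
# Covers sizes up to 4 vCPU only; verify against the AWS docs.
FARGATE_MEMORY_BY_CPU = {
    256: [512, 1024, 2048],
    512: list(range(1 * GIB, 4 * GIB + 1, GIB)),
    1024: list(range(2 * GIB, 8 * GIB + 1, GIB)),
    2048: list(range(4 * GIB, 16 * GIB + 1, GIB)),
    4096: list(range(8 * GIB, 30 * GIB + 1, GIB)),
}


def is_valid_fargate_combo(cpu_units: int, memory_mib: int) -> bool:
    """Return True if the pair appears in the assumed table above."""
    return memory_mib in FARGATE_MEMORY_BY_CPU.get(cpu_units, [])


def to_ecs_values(vcpus: float, memory_gb: float) -> Tuple[str, str]:
    """Convert vCPUs and GB into the string values ECS expects."""
    cpu_units = int(vcpus * 1024)
    memory_mib = int(memory_gb * 1024)
    if not is_valid_fargate_combo(cpu_units, memory_mib):
        raise ValueError(
            f"{cpu_units} CPU units with {memory_mib} MiB is not in the table"
        )
    return str(cpu_units), str(memory_mib)
```

With the values used in this guide, `to_ecs_values(1, 4)` gives the same strings as the manual conversion above, while something like 1 vCPU with 1 GB raises a `ValueError` before any AWS call is made.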

Then create a task definition

A task definition in AWS ECS can be likened to a recipe in cooking. Just as a recipe details the ingredients, quantities, and instructions required to create a dish, a task definition specifies everything ECS needs to know to run a container, like the container image, memory, CPU requirements, environment variables, and other configurations. Think of it this way: If you're making a cake, the recipe will list out the exact amount of flour, eggs, sugar, and other ingredients you need, along with step-by-step baking instructions. Similarly, a task definition lists out the 'ingredients' and 'instructions' for ECS to 'bake' or run your container application correctly in its environment.
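To make the "ingredients" idea concrete, here is a rough sketch of building one container definition as a plain dictionary, including environment variables, which ECS expects as a list of name/value pairs. The helper name `build_container_definition` and the example values (`APP_ENV`, `WORKERS`, the image string) are hypothetical and only there for illustration.

```python
def build_container_definition(name, image, env=None, container_port=5000):
    """Build a container definition dict in the shape ECS expects.

    env is an ordinary dict; ECS wants a list of {"name", "value"} pairs
    with string values, so it is converted here.
    """
    environment = [
        {"name": key, "value": str(value)}
        for key, value in sorted((env or {}).items())
    ]
    return {
        "name": name,
        "image": image,
        "essential": True,
        "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
        "environment": environment,
    }


# Example with made-up values
definition = build_container_definition(
    name="example-container-task",
    image="example-image:latest",  # placeholder, not a real ECR URI
    env={"WORKERS": 2, "APP_ENV": "prod"},
)
```

A dictionary like this is what goes into the `containerDefinitions` list when registering a task definition.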

See below for IAM role information.

import boto3

# create task definition (think of this as the recipe for what to do)
def create_task_definition():
    client = boto3.client("ecs", region_name="us-east-1")
    try:
        response = client.register_task_definition(
            family=task_name,  # name for groupings within ECS
            taskRoleArn='arn:aws:iam::017387919623:role/ecs_task_execution_iam_role',
            executionRoleArn='arn:aws:iam::017387919623:role/ecs_task_execution_iam_role',
            networkMode='awsvpc',
            containerDefinitions=[
                {
                    'name': task_name,
                    'image': f'017387919623.dkr.ecr.us-east-1.amazonaws.com/{repo_name}:latest',
                    'portMappings': [
                        {
                            'containerPort': 5000,
                            'protocol': 'tcp'
                        },
                    ],
                    'essential': True,
                    'logConfiguration': {
                        'logDriver': 'awslogs',
                        'options': {
                            'awslogs-group': '/ecs/example-log-group',
                            'awslogs-region': 'us-east-1',
                            'awslogs-stream-prefix': 'ecs'
                        }
                    }
                }
            ],
            requiresCompatibilities=[
                'FARGATE',
            ],
            cpu=cpu_amount_value,
            memory=memory_amount_value,
            runtimePlatform={
                'cpuArchitecture': 'ARM64',  # this is cheaper if you can support ARM
                'operatingSystemFamily': 'LINUX'
            }
        )
        return response['taskDefinition']
    except Exception as e:
        # print the error and signal failure to the caller
        print(str(e))
        return False

create_task_definition()
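Since `create_task_definition()` returns either the task definition or `False`, it is worth checking the result before moving on. Below is a small sketch of doing that; `describe_registration` is a name I made up, and the sample dictionary only mimics the `family` and `revision` fields that the registration response carries.

```python
def describe_registration(result):
    """Turn the return value of create_task_definition() into a short label.

    Returns "family:revision" on success, or None if registration failed.
    """
    if not result:
        return None
    return f"{result['family']}:{result['revision']}"


# Stand-in for a successful response (values are illustrative only)
sample_result = {"family": "example-container-task", "revision": 3}
label = describe_registration(sample_result)
print(label or "registration failed")
```

The `family:revision` form can be passed as `taskDefinition` when running the task if you want to pin a specific revision; passing only the family name uses the latest active revision.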

Run the task

Here you just need to define the networking the task will run on: the subnets, the security groups, and whether the task gets a public IP.
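The networking piece is just a nested dictionary, so it can be handy to build it with a small helper. This is a sketch only: `build_network_configuration` is a hypothetical name, and the subnet and security group IDs are made-up placeholders that you would swap for your own.

```python
def build_network_configuration(subnets, security_groups, public_ip=True):
    """Build the networkConfiguration dict for a task using awsvpc networking."""
    if not subnets:
        raise ValueError("at least one subnet is required")
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnets),
            "securityGroups": list(security_groups),
            # ECS expects the strings ENABLED or DISABLED, not a boolean
            "assignPublicIp": "ENABLED" if public_ip else "DISABLED",
        }
    }


# Placeholder IDs for illustration; use the ones from your own VPC
network_configuration = build_network_configuration(
    subnets=["subnet-aaaa1111", "subnet-bbbb2222"],
    security_groups=["sg-cccc3333"],
)
```

As far as I understand it, a Fargate task in a public subnet usually needs the public IP enabled to pull its image from ECR, unless the VPC has a NAT gateway or VPC endpoints set up.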

import json

# use the task definition and run it (execute the recipe)
client = boto3.client("ecs", region_name="us-east-1")
response = client.run_task(
    taskDefinition=task_name,
    launchType='FARGATE',
    cluster=cluster_choice,
    platformVersion='LATEST',
    count=1,
    networkConfiguration={
        'awsvpcConfiguration': {
            'subnets': [
                'subnet-##########aaaaaaa',
                'subnet-##########aaaaaaa',
                'subnet-##########aaaaaaa',
                'subnet-##########aaaaaaa',
                'subnet-##########aaaaaaa',
                'subnet-##########aaaaaaa'
            ],
            'assignPublicIp': 'ENABLED',
            'securityGroups': ["sg-#################"]
        }
    }
)

# default=str makes datetime values in the response serializable
print(json.dumps(response, indent=4, default=str))
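The printed response can be long, and a call that "worked" may still report problems in its `failures` list. Here is a hedged sketch of pulling out the useful bits and optionally waiting for the task to finish. `summarize_run_task` and `wait_until_stopped` are names invented for this example; the waiter name `tasks_stopped` is, to my knowledge, one of the waiters boto3 provides for ECS, but check the boto3 docs for your version.

```python
def summarize_run_task(response):
    """Return (task ARNs that were started, human-readable failure strings)."""
    task_arns = [task["taskArn"] for task in response.get("tasks", [])]
    failures = [
        f"{failure.get('arn', '?')}: {failure.get('reason', 'unknown')}"
        for failure in response.get("failures", [])
    ]
    return task_arns, failures


def wait_until_stopped(client, cluster, task_arns):
    """Block until the given tasks stop, using the ECS client's waiter."""
    waiter = client.get_waiter("tasks_stopped")
    waiter.wait(cluster=cluster, tasks=task_arns)


# Stand-in response with made-up ARNs, shaped like what run_task returns
sample_response = {
    "tasks": [{"taskArn": "arn:aws:ecs:us-east-1:000000000000:task/example/abc"}],
    "failures": [{"arn": "arn:aws:ecs:example", "reason": "RESOURCE:MEMORY"}],
}
started, failed = summarize_run_task(sample_response)
```

In the real flow you would call `summarize_run_task(response)` on the result of `client.run_task(...)` and, if any tasks started, pass their ARNs to `wait_until_stopped(client, cluster_choice, started)`.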